Unpacking crucial elements of Solana design in a friendly manner — Proof of History.

Author: Mark Leontev

Big shoutout to @SeanButta and @lostin for their early reads and invaluable insights on the article.

This article marks the beginning of Part 2 in our broader Solana Thesis series. For previous readings, see:

In Part 2, we explore key design aspects of Solana that shape our investment thesis, addressing common misconceptions prevalent in the public crypto discourse. While some basic understanding of DLT (distributed ledger technology) is helpful, we aim to make this series as accessible as possible for non-technical readers.

Despite some solid explanations already available, like those from Helius, Shinobi, Four Pillars, and Anatoly himself, we see a need for another “how Solana works” piece. At DeepWaters, surveying our network, we found that those explanations remain quite technical, requiring a firm grasp of blockchains and cryptography. This makes it hard not just for newcomers but even for many regular investors to fully experience that “a-ha!” moment where everything clicks.

The articles in Part 2 have two main objectives:

- Help readers grasp Solana’s architecture in a way that creates a “click” moment, especially for non-technical audiences.

- Provide insightful context to help readers not just understand Solana’s design, but also appreciate its trade-offs and unlocks, offering a deeper understanding of the impact behind those choices.

The following content is probably not the best way to learn about blockchain designs from scratch. But it will be valuable for anyone who has spent time in the space and whose mental map of Solana and other chains looks like this:

a typical Solana Salad you’ve likely got in your head

We’re going to walk through the most important elements of Solana design and touch upon some misconceptions along the way, meticulously untangling the Solana knot.

A couple of disclaimers:

- We’re not going to cover every element from the picture above — only the ones we consider absolutely essential to help you clear up the confusion. We believe it’s this attempt to explain “all things Solana” in one read that often leaves people struggling to understand how it works and what makes it unique.

- Our goal is to provide a framework for understanding that you can build upon with further reading about other aspects of Solana. Ideally, after this, you’ll know where to place those pieces in your mental map.

- We will build up sequentially from piece to piece rather than diving into a detailed, interconnected analysis right away, and we’ll do so in the form of an essay rather than a report.

- As we go, we’ll touch on some misconceptions that persist about Solana’s design, focusing not only on how things work in practice but also on the implications and trade-offs involved.

- At DeepWaters, we’re primarily investors in this space. Though we’re not a VC, our content is mostly written for other investors. That being said, nothing here should be taken as financial or professional advice. If you think you spot any, feel free to DM the author — he’ll explain who you are.

- Because this is an “explain-it-all-for-me” piece, we will inevitably cut some corners and over-simplify complicated concepts. If you showed this to a developer or someone deep in the Solana ecosystem, they might say, “Well, it’s actually not that simple,” and they’d probably be right. There’s always a trade-off between simplicity and accuracy, and this piece is meant to serve as an initial foundation for deeper exploration, not a comprehensive walk-through.

I’m personally glad to see more and more people appreciating Solana as an asset in 2024. However, it’s interesting that even those who have switched sides to support the ecosystem often attribute Solana’s performance to… well, the wrong factors. It’s not just data centers or parallel execution that make it so fast and affordable, even though those aspects do play a significant role.

The main contributing factor to Solana’s performance, in our view, is:

a wall clock.

Personally, if I were forced, gun to my head, to name the one element of Solana that unlocks most of its capabilities, I would say “the cryptographic clock,” also known as Proof of History.

If you’re surprised or skeptical or simply clueless — this article is 100% for you. Let’s dig in.

Proof of History Concept

Proof of History (PoH) is a cryptographic function that quite literally simulates the way a clock works.

“Introducing a cryptographic clock as a major scalability unlock was not a common view before Solana. It was never a matter of a widespread research and concern amongst blockchain builders that without a cryptographic clock we’d never be able to achieve high transaction throughput.” — Andrew Levine, CEO & Co-Founder of Koinos Group

The very idea of introducing a clock as a means of scaling was, and still is, a counterintuitive direction for any blockchain developer to take. Anatoly himself has said many times that it is this very idea (later called PoH) that started the whole thing. It was his “a-ha” moment.

“I literally had two coffees and a beer, and I had this eureka moment at four in the morning,” — Anatoly Yakovenko, via Cointelegraph.

A darn clock. How exactly can a clock scale anything?

Well, if you think about it: the way clocks work is not only a standardised sequence of numbers with standardised intervals between them, but a sequence and intervals that are identical for everyone on Earth. Think for a minute about just how much of global human coordination is enabled by such a simple little thing we take for granted.

Coordination is exactly what blockchains, as permissionless decentralised systems, have to enable. And as it turns out, coordination without a clock is problematic.

When we think about blockchains and observe that some of them, like Bitcoin or Ethereum, are slow and not very performant, we might wonder why that is, and many people believe it’s down to hardware. Switch over to running them on beefy machines and you’ll get the performance, no?

No. That alone won’t be nearly enough.

Hardware isn’t the root cause of blockchain performance limitations. While computational power certainly influences performance at multiple levels, it’s not the primary bottleneck since blockchains mainly execute simple read and write operations to update network state, unlike resource-intensive tasks such as AI training. If performance depended solely on individual machines (nodes, validators, or miners), we wouldn’t face significant constraints — even basic modern laptops could process tens of thousands of transactions per second. So long as they do it alone.

The scaling issues begin where the network begins, i.e. when you go from one machine to two machines and beyond. At that point, algorithmic constraints are layered on top of the hardware constraints, because you introduce a protocol: an established set of rules that machines follow in order to communicate with one another.

Protocols emerge as the true limiting factor because they dictate exactly how machines must behave. They establish the rules for coordinating and synchronising operations across remote computers, giving rise to what’s known as communication complexity.

What is communication complexity? Put simply, it means computers can’t just receive some information and then carelessly produce a block as they see fit. Instead, the process works like this:

- You receive some information.

- You must then verify the validity of that information.

- Then you need to “consult” with the other computers to confirm their agreement with this information.

- This means you have to go talk to every computer and wait for their approval or disapproval.

- Only then, if all is fine, can you proceed to generate a collective proof that says, “Here’s the consensus we’ve reached.”

This multi-step process, with its requirement to communicate and reach consensus, adds significant complexity compared to a lone machine processing information.

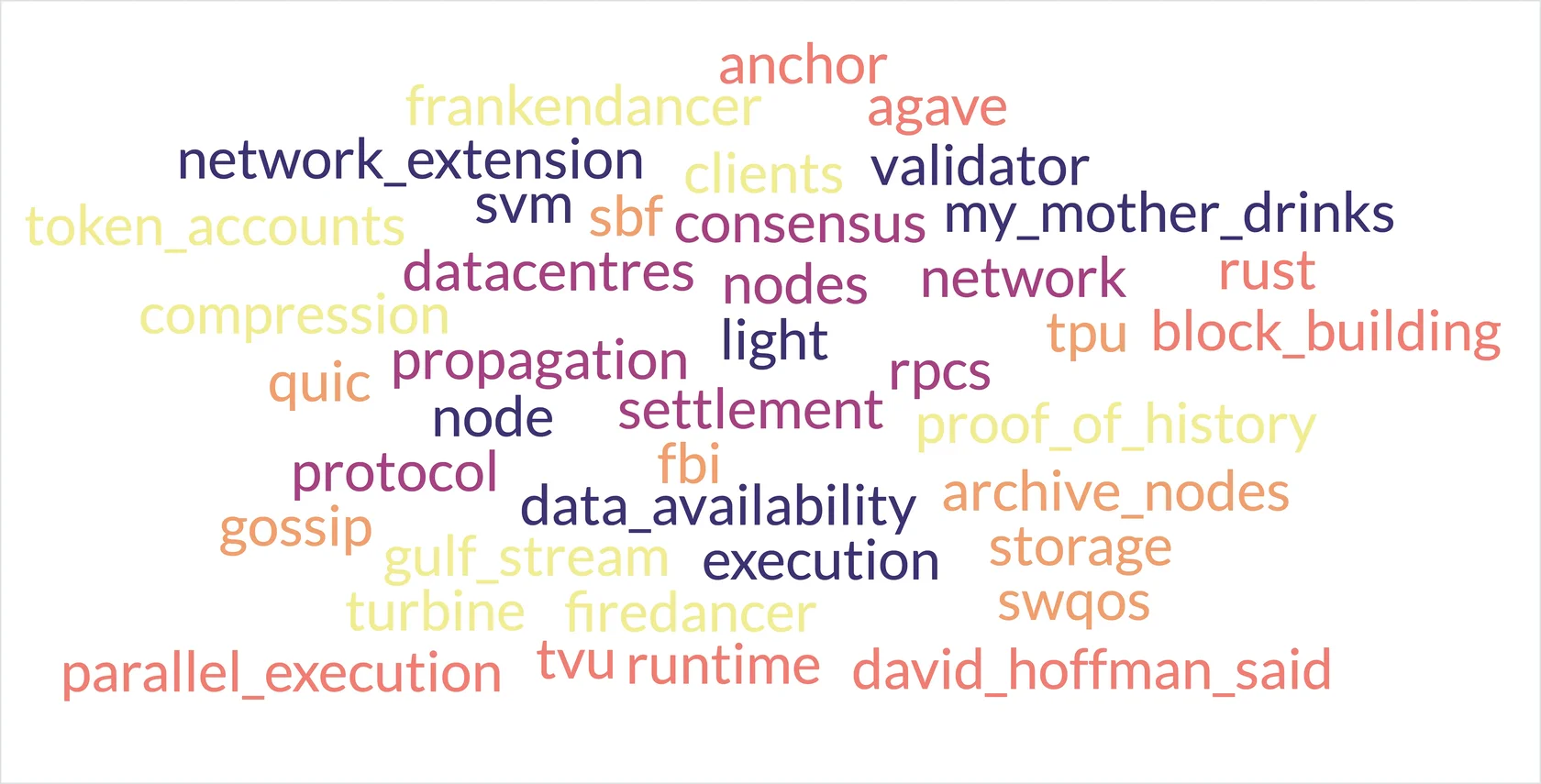

This bureaucratic process goes by the fancy name of “signature accrual”, and it is essentially a performance killer. The more you talk, the less you work, we could say. In classic PBFT-style protocols, every node messages every other node in each voting round, so the number of messages grows quadratically (O(n²) in tech-speak), and during leader changes it can reach cubic growth (O(n³)). To put that in perspective, with just 10 nodes in your network, you’re looking at roughly 100 messages per round, and up to 1,000 in the worst case. Add more nodes, and watch that number skyrocket.
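To make the growth concrete, here is a back-of-the-envelope sketch. It assumes a simplified PBFT-style voting round in which every node sends one message to every other node (about n² messages), and treats the cubic O(n³) figure mentioned above as a worst-case upper bound. Real protocols batch messages, aggregate signatures, and differ in the details, so these are illustrative counts, not measurements.

```python
def all_to_all_messages(n: int) -> int:
    """One all-to-all voting round: each of n nodes sends a message to the other n - 1."""
    return n * (n - 1)


def cubic_messages(n: int) -> int:
    """Worst-case cubic growth, O(n^3), used as an upper-bound illustration only."""
    return n ** 3


# Compare both growth curves for a few network sizes.
for n in (10, 100, 1_000, 2_000):
    print(f"{n:>5,} nodes: {all_to_all_messages(n):>13,} messages per round, "
          f"{cubic_messages(n):>16,} at cubic growth")
```

Even the quadratic count approaches four million messages per round at 2,000 nodes, which is why cutting rounds of communication matters far more than buying faster CPUs.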

What if you have a couple thousand nodes, like Solana? What if you have more? I mean, your system is permissionless, so people just keep joining in. What do you do? How do you scale? The need for communication between nodes makes the overhead climb steeply with every node added, making the system increasingly inefficient.

PBFT (Practical Byzantine Fault Tolerance) variations are what most modern chains use for consensus. Source.

It’s literally all about the math models, i.e. “how do we get the communication complexity down?” So, the bottleneck is not computation or storage. It’s mathematics. It’s the communication overhead.

The more distributed you become, the worse your performance gets, because more and more nodes in your system have to go around asking each other: “This is what the current order of events looks like to me. Is it the same for you?” This is essentially what “reaching consensus” means.

So, in order for the nodes to figure out consensus, they have to undertake multiple rounds of communication and this is what slows blockchains down more than anything else.

Aight, gud. What does a clock have to do with any of that?

Think about how many times you've seen it in the news or movies: a kidnap victim holding up today's newspaper, a time traveler proving their journey with a relevant news headline, or an explorer documenting their discovery alongside a dated periodical. This simple yet powerful act of including a current newspaper in a photograph has become a universally recognised method of proving when an event occurred.

What makes this technique so compelling is its verifiability. Anyone can obtain the same newspaper, allowing for independent confirmation without relying on the photo’s provider or any third party. This universally understood concept transcends cultural and linguistic barriers, tapping into our innate understanding of temporal order.

We understand that the paper must’ve been published before the photo was taken. We understand we can go buy this paper to compare the cover and make sure it is indeed the source of truth we can refer to. We understand while reading a newspaper that the event we read about must’ve happened before the paper was composed and the composition could only have happened before publishing.

Imagine what would happen if we were deprived of this understanding. To be honest with you, I could not even attempt that task, so wired is my brain to temporal order. I asked some AIs to help me out and describe what the process might look like if we were incapable of understanding such things:

“You decide to cook a simple meal: spaghetti with tomato sauce. Normally, you’d follow a sequence — boil water, cook the pasta, prepare the sauce, mix, and serve. But without the sense of temporal order, your ability to perceive or execute tasks in the correct sequence is gone.

You stand in the kitchen holding a box of pasta, aware that you intend to cook, but the steps blur together. Is the water already boiling? Did you chop the garlic? Did you add salt? These questions seem meaningless since each action feels detached from time.” — GPT-4o

It is a severe condition.

“Imagine you’re at a busy coffee shop, but your perception of time’s flow has suddenly vanished:

You’re aware you want coffee, but the usual sequence of events becomes a confusing blur. Are you in line? Have you already ordered? Did you just finish your drink?

Conversations around you become a jumble of words and phrases, with no clear beginning or end. You hear the barista call out your name, but you can’t tell if it’s to take your order or hand you your drink.

The aroma of fresh coffee permeates the air, but you can’t discern if it’s from the cup in your hand, the beans being ground, or the espresso machine in action.” — Claude

Hell.

“A person who is deprived of a sense of temporal order might experience confusion when presented with information about the kidnapped loved one’s survival. They may struggle to understand the sequence of events or the meaning of the ransom video and newspaper evidence. They might repeat questions or actions, or appear anxious and uncertain. It’s important for them to receive clear, simple explanations and reassurance to help them process this information.” — Blue Plaster.

The mention of repeated questions and actions in this answer strikes me as remarkable: this is exactly what nodes do on a blockchain. They ceaselessly ask each other “what’s up” until they can establish with a high degree of probability what is actually up.

Here’s how Helius explains it:

Imagine a busy city intersection with no traffic lights. It would be absolute chaos with cars, trucks, bikes, and pedestrians all trying to go their own way, vying for their turn to cross. There would be accidents, misunderstandings, and distrust between participants. Thankfully, we have traffic lights. Traffic lights bring order; telling who to go and who to stop, adapting to real-time conditions. Most importantly, everyone agrees on traffic lights. We all agree to the rules, and that the rules are applied uniformly.

You get the gist.

What enables our coordination (and the entire global economy) is the notion of temporal order, of which “time” is the most crucial construction and “clock” is the universally agreed upon means of common reference. It doesn’t have to be “seconds” and “minutes” and “days”. The most important function here is to be able to chronologise events sequentially.

Without it, whatever you do would be a dozen times slower, if feasible at all. And this is exactly what a network of computers (validators) has to go through for every single block in many blockchains. They essentially have to figure out what happened after what, and verify that it indeed happened. And the way they do it is, as Blue Plaster said, to “repeat questions and actions.”

Absence of inner temporal order → Necessity to communicate in multiple rounds → Overhead → Slow economy.

A slow economy cannot unlock the most valuable use cases and drive humanity forward.

By giving computers a reliable source of temporal reference in the form of a cryptographic clock, we can greatly reduce the aforementioned overhead.

This is why, according to Anatoly, he couldn’t sleep until 4 a.m., driven by excitement after gaining the cryptographic clock insight. That moment marked the birth of Solana. It wasn’t even called Solana at first. You can watch Toly explaining the whole concept in one of his early talks. Notice how difficult it was for people to fully grasp or appreciate the idea back then.

And it still is. Even though it is essentially very simple: Solana proceeds on the assumption of constructing a notion of time before constructing a notion of agreement in the system.

Proof of History Design

Now let’s get deeper into the weeds and try to grasp the design and how exactly it all works.

Some definitions first:

- “Function” — a reusable piece of code that takes in certain inputs (like ingredients for your cookies), does something with them (follows the recipe), and then gives you an output (the cookies).

- “Hash” — a fingerprint. Just like every person has a unique fingerprint that represents the entirety of their body, a hash is a unique code that represents the entirety of some information. You can think of it as a compact stand-in for the original data, always the same length no matter how large the input is. The main property of such a fingerprint is that it’s practically impossible to recreate the original data from it.

- “Hash function” — the function that makes such a fingerprint. It is designed to be one-way (you can’t reverse it to get the original data) and collision-resistant (it should be practically impossible to find two different inputs that produce the same hash).
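A quick way to see these properties is Python’s standard `hashlib` module, which implements SHA-256, the hash function discussed below. This minimal sketch shows that the fingerprint always has the same length regardless of input size, and that changing a single character produces a completely different-looking fingerprint. The sample inputs are arbitrary.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 fingerprint of `data` as 64 hex characters (256 bits)."""
    return hashlib.sha256(data).hexdigest()


small = fingerprint(b"gm")
big = fingerprint(b"gm" * 1_000_000)  # 2 MB of input, still a 64-character fingerprint
tweaked = fingerprint(b"gn")          # one character changed, unrelated-looking output

print(small)
print(tweaked)
print(len(small), len(big))           # 64 64
```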

Good.

Now think of a hash function running “in a loop”, i.e. producing a sequence of computations where each output becomes the next input. Take SHA-256 (the hash function Bitcoin uses): there’s no way to predict what the next output will be at any given point without actually computing it. The process continues indefinitely, generating results like this:

- e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855

- 6f1ed002ab5595859014ebf0951522d9b0d9f3d8c3e68d5e00b9da0d6d4d3a58

- 3c9e851b2f8fb4b5de30e56b3f4cc92b292c83d18dbb8f2db57be7d2fd2da12c

- cf5b16a778af8380ae5f00bca32045c4864c6c942758fca0ba9d2a6f978bf6d5

- d4735e3a265e16eee03f59718b8bec21dbd4d4f5e77c6141f4727d1c1d6a4489

- etc.

And on and on they go, “ticking” like a clock, each new value appended after the last. This forms a sequence in which every tick can be numbered, naturally constructing a ladder whose height only ever goes up.
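The looping idea fits in a few lines of Python. This is a toy model, not Solana’s implementation: it assumes an arbitrary seed and plain SHA-256 applied to the previous 32-byte output, and it numbers each output so that every “tick” has a position on the ladder. With an empty seed, the first tick is the SHA-256 of empty input, which is the first value in the list above.

```python
import hashlib


def hash_ladder(seed: bytes, steps: int) -> list[tuple[int, str]]:
    """Feed each SHA-256 output back in as the next input, numbering every step.

    Returns (count, hex_digest) pairs; count 1 is the hash of the seed itself.
    """
    state = seed
    ladder = []
    for count in range(1, steps + 1):
        state = hashlib.sha256(state).digest()  # the output becomes the next input
        ladder.append((count, state.hex()))
    return ladder


for count, digest in hash_ladder(b"", 5):
    print(count, digest)
```

Because each step needs the previous step’s output, there is no shortcut: reaching count 1,000,000 requires computing all 999,999 values before it, one after another.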

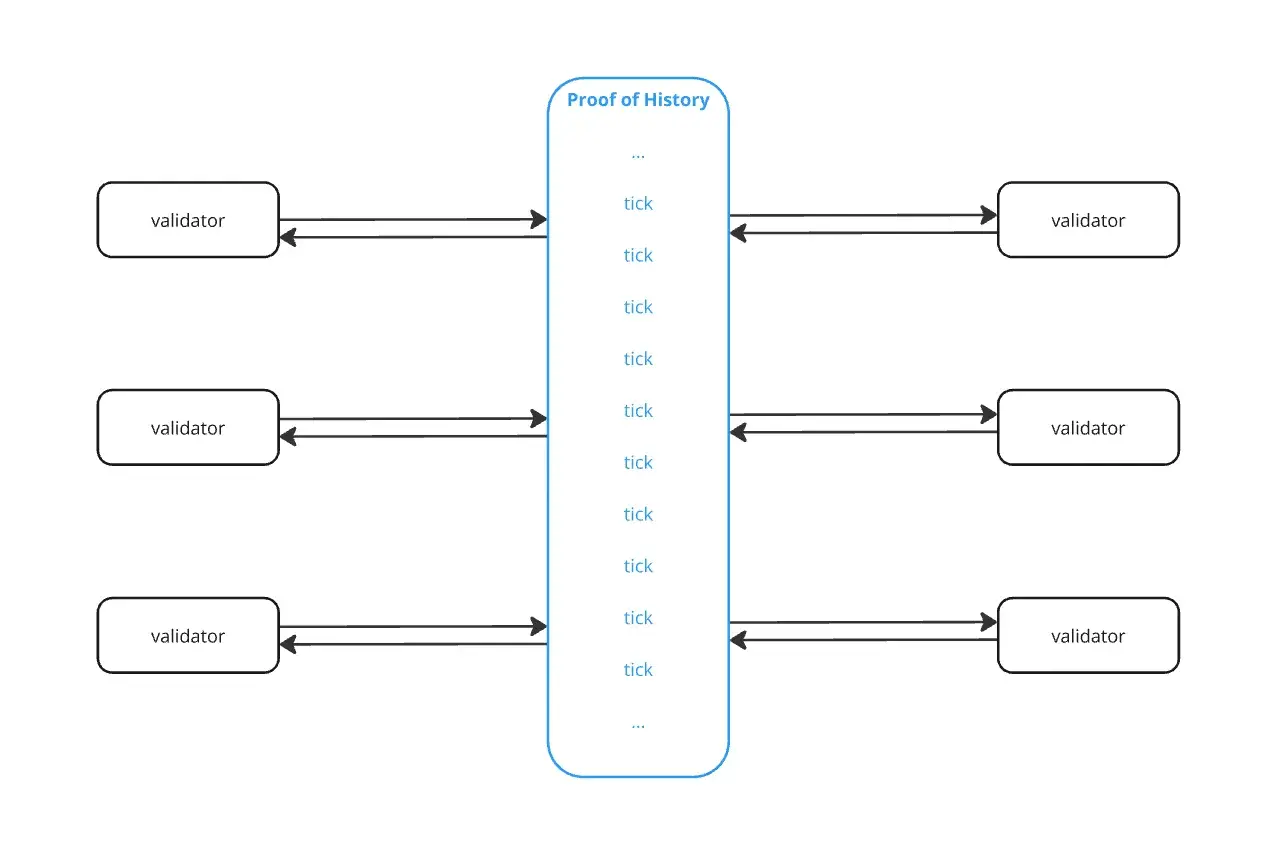

All validators run this mechanism on their CPU constantly, as quickly as they can.

Here’s how you can think about it for now

Although we can’t make it function natively in human timeframes like “one second,” we can encode moments in time as values that roughly translate to human terms.

For instance, the result after a certain number of iterations could correspond to “1 second” in our terms, and after additional iterations it might represent “2 seconds,” and so on, because we know “how long it takes” to compute SHA-256 in human terms. The rate at which a single core produces SHA-256 hashes varies surprisingly little across modern computers, and this is what makes it such a good choice for a universal “clock”.

By supplying an initial value along with the result of the specified number of iterations, a validator can demonstrate that approximately “1 second” was spent running the iterative SHA-256 hash function. We can use this clock to target any interval we have in mind, for example 400 ms, because we aim to be lightning fast.
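The arithmetic behind this is simple. The sketch below assumes a hypothetical rate of 2,000,000 sequential SHA-256 iterations per second on one core (an illustrative figure, not a measured or official one) and converts between iteration counts and approximate wall-clock time. It also includes a small calibration helper that measures your own machine’s rate; in pure Python this will be much slower than optimised native code, and the result varies by hardware.

```python
import hashlib
import time

ASSUMED_HASHES_PER_SECOND = 2_000_000  # hypothetical single-core rate, illustration only


def hashes_for(duration_s: float, rate: float = ASSUMED_HASHES_PER_SECOND) -> int:
    """How many sequential iterations roughly correspond to `duration_s` seconds."""
    return round(duration_s * rate)


def seconds_for(iterations: int, rate: float = ASSUMED_HASHES_PER_SECOND) -> float:
    """Approximate wall-clock time represented by `iterations` sequential hashes."""
    return iterations / rate


def measure_rate(iterations: int = 200_000) -> float:
    """Measure this machine's sequential SHA-256 rate in hashes per second."""
    state = b"calibration"
    start = time.perf_counter()
    for _ in range(iterations):
        state = hashlib.sha256(state).digest()
    return iterations / (time.perf_counter() - start)


print(hashes_for(0.4))          # iterations for a 400 ms target: 800000
print(seconds_for(2_000_000))   # 1.0
print(f"this machine: ~{measure_rate():,.0f} hashes/s")
```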

“If the PoH service creates a chain of a thousand hashes, we know time has passed for it to have calculated each hash sequentially — this can be thought of as a “micro proof of work.” — Helius Blog

This stream of data essentially creates an endless ladder of “ticks”, and that is literally all there is to it. It’s a dumb darn clock. Or, since it never loops back on itself, you can think of it as a ladder. That’s why we say PoH is not Solana’s consensus. It’s a separate thing that runs autonomously, in parallel with consensus.

However, it greatly empowers consensus, because we can take any data and “compare” it against this clock to solve the problem of ordering, i.e. “when did our data arrive?”

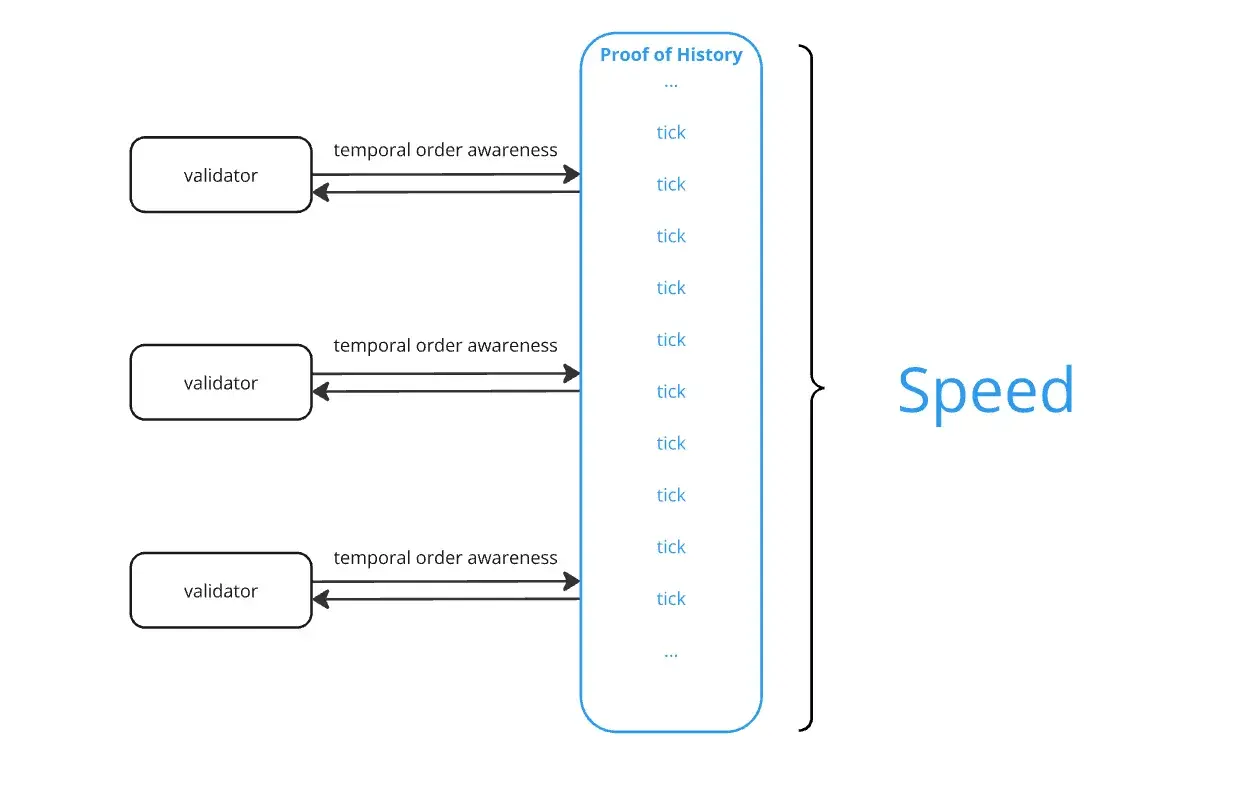

Put simply, even though here we do not operate in human timeframes like “second”, we’re still adhering to a unified “sense of time passed” and thus, we’re able to order events (e.g. Solana transactions) chronologically without making machines go talk to each other multiple times.

Instead of undertaking multiple rounds of synchronisation node-to-node, Solana “samples” this computational ladder at regular intervals (every time a block must be produced), with these samples forming the blockchain data structure. Boom.
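Sampling can be sketched as recording a checkpoint every fixed number of iterations. In this toy version, a “tick” is the (count, hash) pair taken every `hashes_per_tick` iterations, and a slot (one block opportunity) is a fixed number of ticks. Both parameter names and their values are hypothetical and deliberately tiny so the example runs instantly; real configurations use far larger numbers.

```python
import hashlib


def sample_ticks(seed: bytes, hashes_per_tick: int, num_ticks: int) -> list[tuple[int, str]]:
    """Run the hash ladder and keep only every `hashes_per_tick`-th value as a tick."""
    state = seed
    ticks = []
    count = 0
    for _ in range(num_ticks):
        for _ in range(hashes_per_tick):
            state = hashlib.sha256(state).digest()
            count += 1
        ticks.append((count, state.hex()))  # the sample: position on the ladder plus its hash
    return ticks


def group_into_slots(ticks: list, ticks_per_slot: int) -> list[list]:
    """Group consecutive ticks into fixed-size slots."""
    return [ticks[i:i + ticks_per_slot] for i in range(0, len(ticks), ticks_per_slot)]


ticks = sample_ticks(b"genesis", hashes_per_tick=100, num_ticks=8)  # hypothetical values
slots = group_into_slots(ticks, ticks_per_slot=4)
for slot_index, slot in enumerate(slots):
    print(f"slot {slot_index}: ends at count {slot[-1][0]}")  # 400, then 800
```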

Elegantly, it’s a way of encoding both the passage of time and the sequence of events in a single data structure. That’s why it’s called Proof of History, and it is indeed quite a unique thing, because it means that the network’s sense of time is derived from the data itself. There’s no subjective judgement about time involved, as you do not rely on external time inputs or arbitrary agreement among nodes.

PoH generates its timing from the data being processed, which means the timing is intrinsic to the system itself. On such a ladder, you could theoretically synchronise nodes between Mars and Earth if need be, which wouldn’t be possible with human timestamps because a Martian solar day is roughly 40 minutes longer than Earth’s.

When I open and observe events on this ladder, I can just “know” that “these” two particular events happened within “this” amount of time from each other. I don’t have to request any info about said events from the network (no communication overhead). We can prove that the data was created sometime before it was appended. Just like we know that the events published in the New York Times occurred before the newspaper was written.

Proof of History Properties & Unlocks

Intervals on the PoH clock indicate that some computation (and therefore time) has been expended by a participant. However, this method alone wouldn’t be sufficient. Why? Because while the future values of the hash chain are unpredictable, they can still be computed: the chain depends only on its starting value, so anyone can run it ahead of time. With a fast enough processor, one can quickly iterate toward a desired value, and someone with an even faster machine could reach it sooner still.

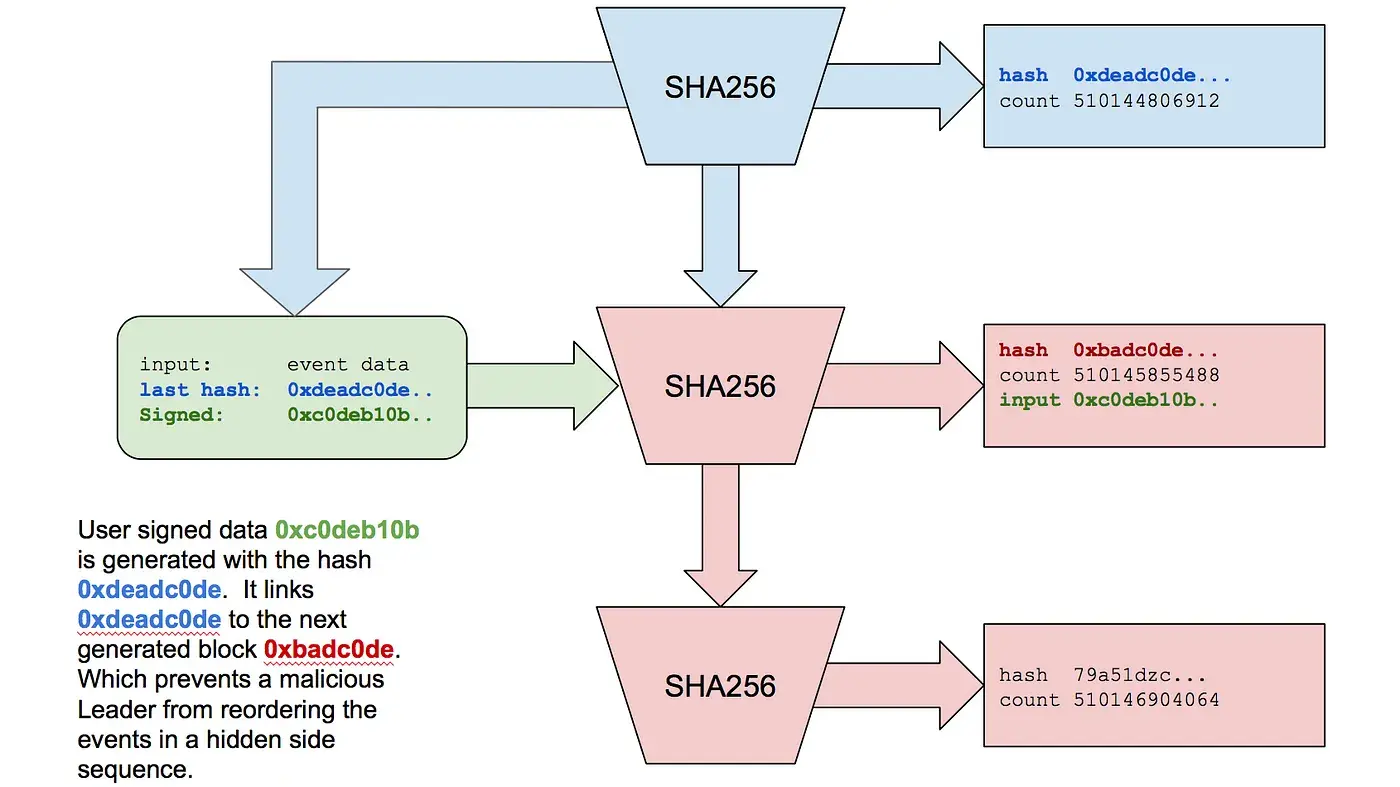

So, what’s the solution? You can encode messages within this structure by mixing a blob of data into the chain at a specific point (where the sample is taken). This “insertion” alters the entire computation from that point on, leading to unpredictable changes in all subsequent hashes. This is where “Solana the blockchain” is born, as those “insertions” are exactly what happens when validators add transactions.
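Here is a minimal sketch of “insertion”, assuming the simplest possible mixing rule: hash the previous state together with the SHA-256 of the inserted data. Solana’s actual entry format differs in detail, and the function name and transaction text below are hypothetical. The point is only that inserting data changes every value after it, so nobody could have precomputed that part of the ladder.

```python
import hashlib
from typing import Optional


def ladder(seed: bytes, steps: int, insert_at: Optional[int] = None, data: bytes = b"") -> list[bytes]:
    """Hash chain of `steps` values; optionally mix `data` in at step `insert_at`."""
    state = seed
    values = []
    for step in range(1, steps + 1):
        if step == insert_at:
            # Mix the data's fingerprint into the running state.
            state = hashlib.sha256(state + hashlib.sha256(data).digest()).digest()
        else:
            state = hashlib.sha256(state).digest()
        values.append(state)
    return values


plain = ladder(b"genesis", 10)
with_tx = ladder(b"genesis", 10, insert_at=6, data=b"alice pays bob 1 SOL")

same_before = all(a == b for a, b in zip(plain[:5], with_tx[:5]))       # steps 1-5
all_differ_after = all(a != b for a, b in zip(plain[5:], with_tx[5:]))  # steps 6-10
print(same_before, all_differ_after)  # True True
```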

So the PoS blockchain and PoH clock mechanism reinforce each other — the blockchain strengthens the clock, while the clock provides secure timestamping for the blockchain. This synergistic relationship makes the overall Solana architecture powerful and self-sustaining.

- Therefore, PoH empowers the blockchain and, at the same time, gains additional useful properties from it.

As a block builder, I can take a previous hash that’s part of this historical record, put it in my blob of data (Solana transactions), sign it, and mix it into the hash that’s currently being generated on the ladder.

“This is just like taking a photograph with the New York Times newspaper in the background. Because this message contains 0xdeadc0de hash we know it was generated after count 510144806912 was created.” — Anatoly Yakovenko, source.

Now we have a continuous stream of signed data pieces that point back in time to earlier data. This way, a malicious actor cannot reverse the order of any of these events, because doing so would change every hash that follows.
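The tamper-evidence argument can be checked with a toy recorder. It assumes a simple mixing rule (hash of the previous state concatenated with the hash of the event) and a hypothetical spacing of three plain ticks between events; signatures are left out to keep it dependency-free. It records each event with its position on the ladder and shows that replaying the same events in a different order ends at a different hash, so a reordered history no longer matches the one everyone saw.

```python
import hashlib


def record(seed: bytes, events: list[bytes], hashes_between: int = 3) -> tuple[list[tuple[int, bytes, str]], str]:
    """Run a toy hash ladder, mixing in each event after `hashes_between` plain ticks.

    Returns the log of (count, event, hash_after_event) and the final hash.
    """
    state = seed
    count = 0
    log = []
    for event in events:
        for _ in range(hashes_between):
            state = hashlib.sha256(state).digest()  # plain ticks: time passing
            count += 1
        state = hashlib.sha256(state + hashlib.sha256(event).digest()).digest()  # mix in the event
        count += 1
        log.append((count, event, state.hex()))
    return log, state.hex()


events = [b"deposit 10", b"withdraw 10"]
log, final = record(b"genesis", events)
_, reordered_final = record(b"genesis", list(reversed(events)))

for count, event, digest in log:
    print(count, event.decode(), digest[:16])  # events land at counts 4 and 8
print(final != reordered_final)  # True: a different order yields a different history
```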

“And we only need one core in the world to run this. And we can record every event that’s happening in the world with cryptographic certainty. So, a hundred years from now you can observe the history and know that this event happened now and this event happened “two seconds” from that.” — Anatoly Yakovenko, co-founder.

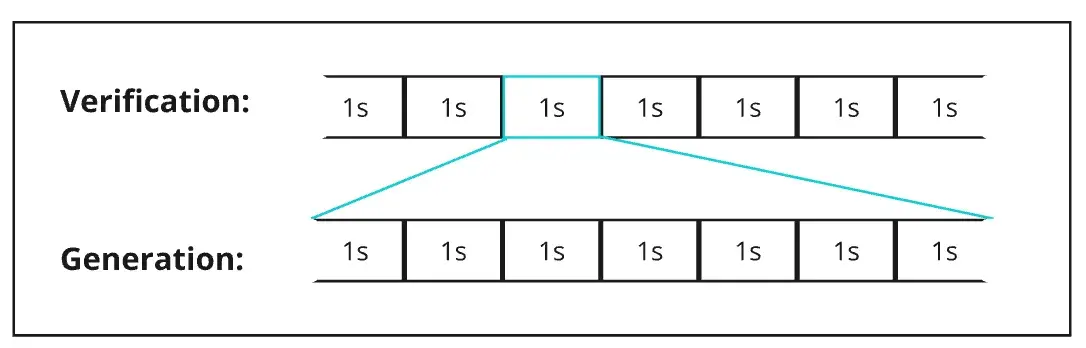

What’s impressive about this system is that, although producing events on this computational ladder takes time, verifying them can happen much faster by splitting the work across many cores. Because the generator publishes intermediate hashes along the way, each segment between two known hashes can be checked independently, so other validators can verify thousands of hashes (events) simultaneously, at a significantly faster rate than they were originally generated. Since these hashes have been broadcast across the network, they are already known and accessible for validation right away.

The figure of ‘1s’ is arbitrary, used solely for illustrative purposes.

- Therefore, PoH is difficult to produce but easy to verify.

Generation happens in real time, but verification is parallelisable. With modern GPUs like the RTX 4070 Ti, equipped with an impressive 7,680 CUDA cores, verification speeds can reach remarkable levels.
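Why verification parallelises can be shown with a toy example. The producer publishes checkpoint hashes along the way; a verifier can then check each segment between consecutive checkpoints independently, because each segment’s starting hash is already known. The checkpoint spacing is a hypothetical value. Python threads are used here only to illustrate the fan-out: in CPython, hashing tiny 32-byte inputs gets no real speedup from threads, so a serious verifier would use separate processes, SIMD, or GPUs.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def produce(seed: bytes, segments: int, hashes_per_segment: int) -> list[bytes]:
    """Sequentially generate the ladder, publishing a checkpoint after each segment."""
    checkpoints = [seed]
    state = seed
    for _ in range(segments):
        for _ in range(hashes_per_segment):
            state = hashlib.sha256(state).digest()
        checkpoints.append(state)
    return checkpoints


def verify_segment(start: bytes, end: bytes, hashes_per_segment: int) -> bool:
    """Recompute one segment from its known start and compare with the published end."""
    state = start
    for _ in range(hashes_per_segment):
        state = hashlib.sha256(state).digest()
    return state == end


def verify_parallel(checkpoints: list[bytes], hashes_per_segment: int, workers: int = 4) -> bool:
    """Check all segments independently; each one could run on its own core."""
    pairs = list(zip(checkpoints[:-1], checkpoints[1:]))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda p: verify_segment(p[0], p[1], hashes_per_segment), pairs)
        return all(results)


HASHES_PER_SEGMENT = 1_000  # hypothetical checkpoint spacing
cps = produce(b"genesis", segments=8, hashes_per_segment=HASHES_PER_SEGMENT)
print(verify_parallel(cps, HASHES_PER_SEGMENT))  # True

cps[5] = hashlib.sha256(b"forged").digest()      # tamper with one published checkpoint
print(verify_parallel(cps, HASHES_PER_SEGMENT))  # False
```

Generation of the eight segments is strictly sequential, while the eight checks share no state, which is exactly the asymmetry described above.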

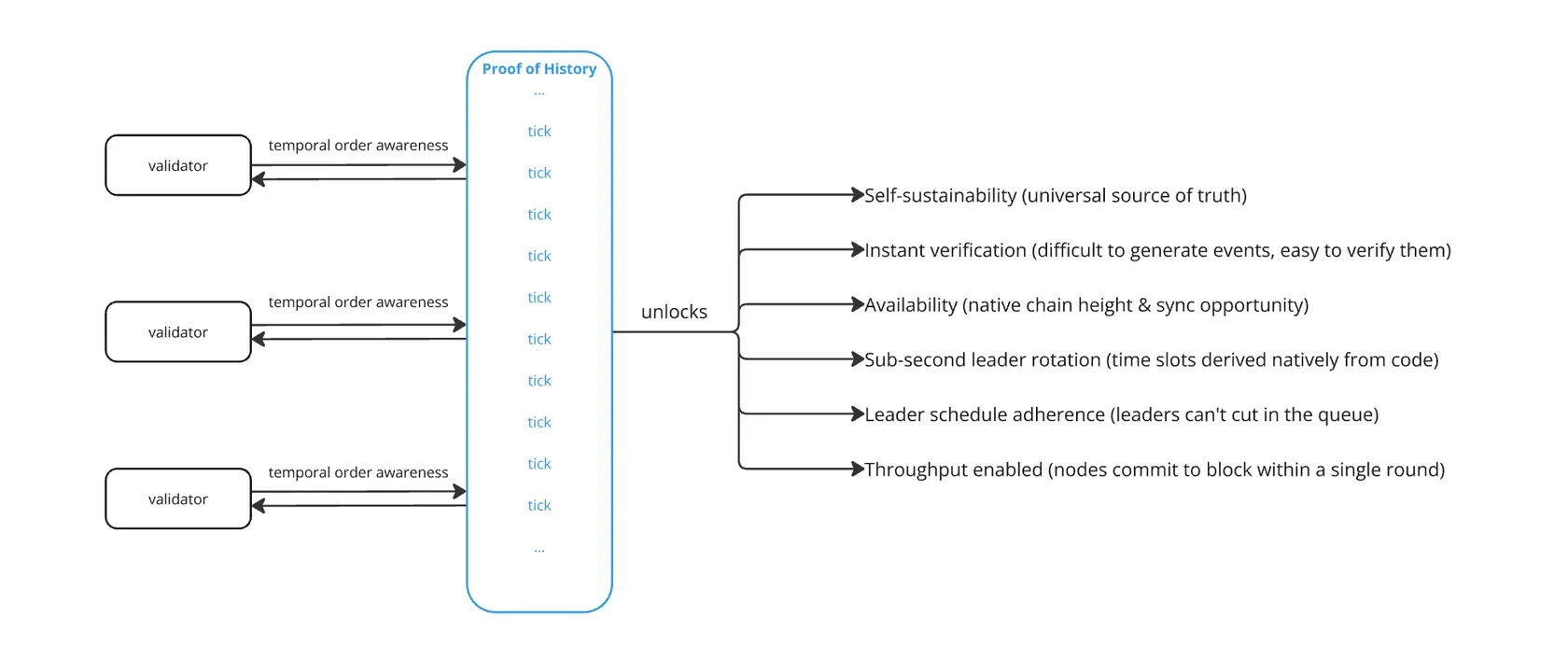

Another important aspect is availability. In the event of a significant network partition, most other blockchains would need to undergo a hard fork to determine which side of the network is valid. This could render all transactions on the other side invalid and orphaned. Proof of History, however, allows you to recover information from any point, allowing separated systems to “know” what happened while they were apart.

- Therefore, PoH encodes availability directly into the ledger, representing the true height of the blockchain within it.

Blocks must be submitted in an orderly sequence; otherwise, multiple nodes would submit blocks at the same time. The network can therefore only be fast if just one node at a time submits the next block to be considered for inclusion in the blockchain. This is why Solana makes a “leader schedule”, so that all nodes know who produces the next block.
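A leader schedule only works if every node can compute the same one on its own. The sketch below is hypothetical: it derives a stake-weighted schedule from a shared seed using Python’s `random.Random`, giving each chosen leader a run of consecutive slots. Solana’s real schedule is also stake-weighted and computed per epoch, but its exact algorithm, seed, and parameters differ from this toy; the validator names and stakes are made up.

```python
import random


def leader_schedule(stakes: dict[str, int], seed: int, num_slots: int, slots_per_leader: int = 4) -> list[str]:
    """Deterministically pick a stake-weighted leader for every slot.

    Every node calling this with the same stakes and seed gets the same schedule,
    so nobody has to ask anyone else whose turn it is.
    """
    rng = random.Random(seed)        # shared seed -> identical choices on every node
    validators = sorted(stakes)      # fixed order so the result doesn't depend on dict order
    weights = [stakes[v] for v in validators]
    schedule = []
    while len(schedule) < num_slots:
        leader = rng.choices(validators, weights=weights, k=1)[0]
        schedule.extend([leader] * slots_per_leader)  # consecutive slots for the same leader
    return schedule[:num_slots]


stakes = {"validator-a": 500, "validator-b": 300, "validator-c": 200}  # hypothetical stakes
schedule = leader_schedule(stakes, seed=42, num_slots=12)
for slot, leader in enumerate(schedule):
    print(slot, leader)
```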

However, the problem is that there is no perfect way to synchronise on sub-second boundaries using human notions of time (“seconds”, “milliseconds”). There are methods for establishing synchronised time between distant computers, but they require cooperation between nodes (=overhead), and a dishonest node could provide false time information (as such time is not intrinsic to the system), or simply go out of turn and claim that it is everyone else whose clock is wrong while its own clock is correct (a matter of subjective judgement). A node that sees a block emitted by some leader that went “too early” now has to decide whose clock is correct: the other node’s, or its own.

- Therefore, because PoH is derived from a hash function rather than from wall-clock time, it allows for smooth and fast leader rotation, as shown on this dashboard.

The Proof of History data included with the block shows that the block was emitted during its slot and not at any other time, which is quickly and easily verifiable by any validator. The block also, of course, contains the signature of the leader who emitted it, allowing other validators to quickly prove that it was emitted by the proper leader for that slot. And so blocks can easily be checked to ensure that they were emitted by the correct leader at the correct time.
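Checking a block against the schedule then needs no conversation with other nodes. In this hypothetical sketch, a block carries the PoH count at which it was produced and the identity of its signer; the verifier converts the count into a slot number and looks up who was supposed to lead that slot. The signature check is reduced to a plain identity comparison to keep the example dependency-free (a real validator verifies a cryptographic signature), and the parameter values and leader names are illustrative.

```python
from dataclasses import dataclass

HASHES_PER_TICK = 100  # hypothetical
TICKS_PER_SLOT = 4     # hypothetical


@dataclass
class Block:
    poh_count: int  # position on the PoH ladder when the block was emitted (counts start at 1)
    signer: str     # stand-in for the leader's cryptographic signature


def slot_of(poh_count: int) -> int:
    """Which 0-indexed slot a PoH count falls into (slot 0 covers counts 1..400 here)."""
    hashes_per_slot = HASHES_PER_TICK * TICKS_PER_SLOT
    return (poh_count - 1) // hashes_per_slot


def is_valid(block: Block, schedule: list[str]) -> bool:
    """Accept a block only if it was emitted inside a scheduled slot by that slot's leader."""
    slot = slot_of(block.poh_count)
    if slot < 0 or slot >= len(schedule):
        return False  # outside the known schedule
    return block.signer == schedule[slot]


schedule = ["alice", "bob", "carol"]  # hypothetical leaders for slots 0, 1, 2
print(is_valid(Block(poh_count=450, signer="bob"), schedule))  # True: count 450 is in slot 1
print(is_valid(Block(poh_count=150, signer="bob"), schedule))  # False: slot 0 belongs to alice
```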

“This feature is what allows Solana leaders to follow in leader sequence with minimal overhead, which is the main factor that makes the Solana network so fast.” — Shinobi

And because the leader schedule must be respected according to these rules, it is censorship resistant, which means that a validator cannot exclude another validator by omitting a competing block in a legitimate leader’s slot. See examples here.

- Therefore, PoH enforces adherence to the leader schedule, making the process not only smooth but also censorship resistant.

Consensus emerges as an agreement on temporal order. In a system where temporal ordering is natively incorporated, the block commitment process becomes streamlined.

- Therefore, PoH significantly reduces consensus overhead — though it doesn’t completely separate consensus from throughput as some explanations might suggest.

This optimisation is key to Solana’s scaling approach. By leveraging PoH’s verifiable time ordering, nodes can commit to a block within one round of communication, an effective solution even if full finality still requires additional rounds for safety. Nonetheless, PoH is what largely allows Solana to move its bottleneck away from networking overhead and toward the physical limits of hardware. Essentially, such a system scales as a function of the cores added to validators’ hardware.

Conclusion

For now, we can revise the initial mental map we presented earlier as follows:

Proof of History unlocks crucial setups and properties for the system that leverages it.

Even though some of these unlocks focus on specific security guarantees rather than directly pursuing scaling, overall, if we were to capture the essence of the situation, we could revise the picture as follows:

Cutting consensus overhead is the key here, and PoH plays a major role in enabling this, allowing the network to fully utilise available bandwidth for data transfer at maximum speed.

In the following articles, we’re going to dive a little deeper into the woods and explore the downstream design choices enabled by the cryptographic clock Solana built.